VMware Reservations and Avaya Aura Communication Manager

With great power comes great responsibility... Avaya Aura applications can now be deployed on multiple virtualization platforms, including Google Cloud, AWS and VMware. With these new deployment options, care still needs to be taken to ensure that the applications have the resources (CPU, disk, memory and network) they need to run properly.

In this blog post we show what can happen to Communication Manager servers when the recommended practice is not followed: leaving in place the CPU and memory reservations that are requested at deployment time.

An issue reported to our service desk

We had a case where a duplicated pair of Communication Manager servers running on VMware interchanged and became unstable after the interchange. The servers were running Avaya Aura Communication Manager 8.1.1 with the updates shown in the swversion output below.

Output from the swversion command:

```
dadmin@server> swversion
Operating system: Linux 3.10.0-1062.1.2.el7.x86_64 x86_64 x86_64
Built: Sep 16 14:19 2019
Contains: 01.0.890.0
CM Reports as: R018x.01.0.890.0
CM Release String: vcm-018-01.0.890.0
RTS Version: CM 8.1.1.0.0.890.25763
Publication Date: 10 April 2019
VMwaretools version: 10.2.5.3619 (build-8068406)
App Deployment: Virtual Machine
VM Environment: VMware

UPDATES:
Update ID                         Status       Type  Update description
--------------------------------- ------------ ----- ---------------------------
01.0.890.0-25763                  activated    cold  8.1.1.0.0-FP1
KERNEL-3.10.0-1062.1.2.el7        activated    cold  kernel patch KERNEL-3.10.0-

Platform/Security ID              Status       Type  Update description
--------------------------------- ------------ ----- ---------------------------
PLAT-rhel7.6-0020                 activated    cold  RHEL7.6-SSP002

CM Translation Saved: 2020-05-04 22:00:19
CM License Installed: 2020-05-04 20:47:02
CM Memory Config: Large
```

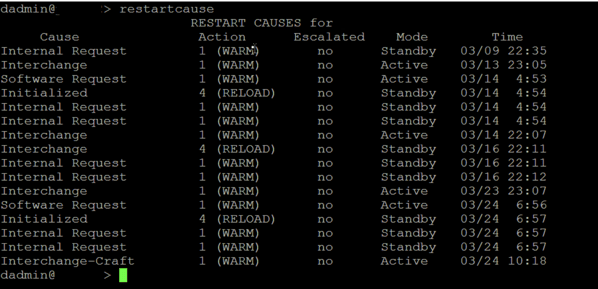

The issue occurred when the servers transitioned between active and standby. The transition times, as reported by the restartcause command run from the Linux shell, are shown below.

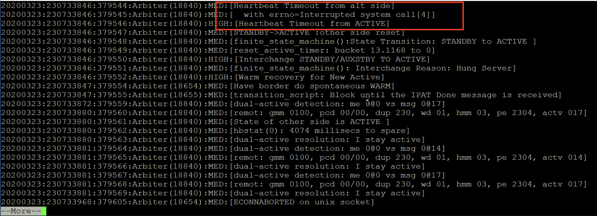

Knowing that the issue occurred at 2020-0324-06:57 (06:57 on 24 March 2020), we looked at the log file in /var/log/ecs/ that was created just before this timestamp.
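If you prefer to locate that file from the shell rather than by eye, the sketch below shows one way to do it. It assumes GNU find (as on the RHEL 7 based Communication Manager VM above) and assumes the files in /var/log/ecs/ are written one after another and rotated, so the file that was active at the time of the issue is the oldest one last modified after the timestamp. The function name log_active_at is hypothetical, not a Communication Manager command, and the timestamp has to be given in a form find understands, such as "2020-03-24 06:57".

```shell
#!/bin/sh
# Hypothetical helper, not a Communication Manager command.
# Prints the file in DIR that was still being written at TIMESTAMP. Assumes
# the files are written one at a time and rotated, so that file is the
# oldest one whose last modification time is later than TIMESTAMP.
# Requires GNU find for -newermt and -printf.
log_active_at() {
    local dir="$1" ts="$2"
    # "%T@ %p" prints "<mtime in epoch seconds> <path>" for each match;
    # sort oldest first and keep only the path from the first line.
    find "$dir" -maxdepth 1 -type f -newermt "$ts" -printf '%T@ %p\n' \
        | sort -n \
        | head -n 1 \
        | cut -d ' ' -f 2-
}

# Example for the interchange in this post (24 March 2020, 06:57):
#   log_active_at /var/log/ecs "2020-03-24 06:57"
```

Because the selection relies on modification times, it is worth opening the reported file and confirming that its first entries really start before the timestamp.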

We saw the following in the file highlighted in red.

This would normally signal an issue with the network but these two Communication Manager servers are virtual machines connected by a virtual network so there is no real physical network between them. Due to the high speeds and low latency of these virtual networks, it is not very likely that an underlying network is truly the cause of our issue.

Cause of our issue?

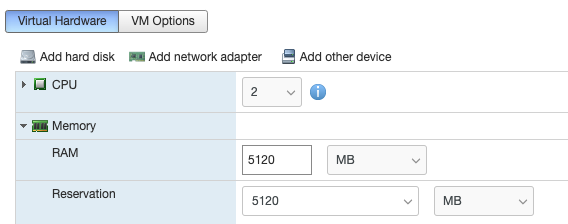

Since these are virtual machines, it is important to check the CPU and memory reservations assigned to them. A reservation sets aside a guaranteed amount of CPU (in MHz) and memory (in MB) on the VMware host for a virtual machine, so those resources are always available to it. This way, if resources on the physical servers ever become scarce, our Communication Manager virtual machines still have what they need.

Checking our resources

To check the CPU and memory reservations of each virtual machine, run the following commands on each Communication Manager server:

```
dadmin@server> vmware-toolbox-cmd stat cpures
0 MHz
dadmin@server> vmware-toolbox-cmd stat memres
0 MB
```

These commands should return the values that were set at deployment time, which depend on the deployment option (architecture) that was selected.
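On a server with many VMs to audit, the same check can be scripted. The sketch below is an assumption-laden example rather than an Avaya tool: it assumes a POSIX shell on the Communication Manager VM with vmware-toolbox-cmd on the PATH (as in the output above) and that the output starts with a number followed by a unit, as in "0 MHz". The function names and the TOOLBOX override variable are hypothetical; TOOLBOX only exists so the logic can be tried without VMware Tools installed.

```shell
#!/bin/sh
# Sketch: flag a Communication Manager VM whose CPU or memory reservation is 0.
# TOOLBOX is a hypothetical override hook so the logic can be exercised
# without VMware Tools; on the CM VM it defaults to vmware-toolbox-cmd.
TOOLBOX=${TOOLBOX:-vmware-toolbox-cmd}

# Print the number from "vmware-toolbox-cmd stat NAME" (e.g. "0 MHz" -> 0).
# Returns 2 if the command fails or the output does not start with a number.
reservation_of() {
    local out
    out=$("$TOOLBOX" stat "$1") || return 2
    out=${out%% *}
    case $out in
        ''|*[!0-9]*) return 2 ;;
    esac
    echo "$out"
}

# Report both reservations; return 1 if either is zero or unreadable.
check_reservations() {
    local stat value status=0
    for stat in cpures memres; do
        if ! value=$(reservation_of "$stat"); then
            echo "$stat: could not read reservation" >&2
            status=1
        elif [ "$value" -eq 0 ]; then
            echo "$stat: reservation is 0 (not set)" >&2
            status=1
        else
            echo "$stat: $value"
        fi
    done
    return "$status"
}

# Run the check only where the real tool is installed.
if command -v "$TOOLBOX" >/dev/null 2>&1; then
    check_reservations
fi
```

Given the output above, this check would report both reservations as 0 and return a non-zero status, which makes it easy to use from other scripts.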

For the values required by Communication Manager, review this link and go to page 17.

In our case, however, both commands returned zero. To fix this, shut down the virtual machines, adjust the CPU and memory reservations in VMware according to the referenced link, and power the virtual machines back on.

After making the adjustments in VMware, rerun the vmware-toolbox-cmd commands to verify that the new reservations are in place:

```
dadmin@server> vmware-toolbox-cmd stat cpures
6510 MHz
dadmin@server> vmware-toolbox-cmd stat memres
5120 MB
```
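A stricter verification compares each value against the reservation the deployment actually requires, not just against zero. The sketch below assumes a POSIX shell with vmware-toolbox-cmd available and hard-codes the values from this case (6510 MHz and 5120 MB) in the hypothetical variables REQUIRED_CPU_MHZ and REQUIRED_MEM_MB; replace them with the values for your deployment option from the referenced link. The helper name meets_reservation is also hypothetical.

```shell
#!/bin/sh
# Sketch: confirm reservations meet the values required for this deployment.
# These are the values from this post's case; use the ones from the
# Communication Manager deployment documentation for your own option.
REQUIRED_CPU_MHZ=6510
REQUIRED_MEM_MB=5120

# meets_reservation OUTPUT REQUIRED
# OUTPUT is a line such as "6510 MHz". Succeeds if its number is >= REQUIRED;
# returns 2 if OUTPUT does not start with a number.
meets_reservation() {
    local value="${1%% *}"
    case $value in
        ''|*[!0-9]*) return 2 ;;
    esac
    [ "$value" -ge "$2" ]
}

# Run only on a VM where VMware Tools is installed.
if command -v vmware-toolbox-cmd >/dev/null 2>&1; then
    if meets_reservation "$(vmware-toolbox-cmd stat cpures)" "$REQUIRED_CPU_MHZ" &&
       meets_reservation "$(vmware-toolbox-cmd stat memres)" "$REQUIRED_MEM_MB"; then
        echo "Reservations OK"
    else
        echo "Reservations are below the required values" >&2
    fi
fi
```

Using "greater than or equal" rather than an exact match means a reservation set above the documented value still passes.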

With the reservations restored, the virtual machines are guaranteed the resources they need to operate correctly.